Joshua Allen, 'So You Think You Can Dance' Winner, Dies at 36: What We Know About His Passing and Legacy

The news arrives like a system error, a line of code that simply reads: `PROCESS_TERMINATED`. Joshua Allen, a name that for a moment represented a pinnacle of kinetic potential, is gone at 36. To most, this is a sad, brief headline. A flicker of celebrity tragedy soon to be replaced by the next.

But I look at this, and I don’t just see a headline. I see a critical failure in a system we’ve all built. I see a ghost in the machine.

For those who don’t remember, let’s rewind to 2008. The system was a television show, “So You Think You Can Dance,” a meritocracy of muscle and motion designed to identify and elevate raw talent. And Joshua Allen was its chosen one. I remember watching that finale, and I honestly felt like I was seeing the future of movement. He was a hip-hop dancer, but that label feels so inadequate now. He moved with a kind of liquid electricity, a human special effect. He wasn’t just executing choreography; he was solving a complex physical problem in real time, and the solution was breathtaking.

That win was the system working perfectly. It took a raw input, a gifted human, and processed him into a star. The speed of his ascent was staggering: the gap between obscurity and universal recognition was closing faster than anyone could comprehend, driven by a feedback loop of public adoration and undeniable talent. He stood on that stage, the victor over another incredible talent, Stephen “tWitch” Boss, a bright light who would also be extinguished far too soon. In that moment, the algorithm of fame seemed flawless. It promised a simple, linear progression: talent plus exposure equals a successful, upward trajectory. A beautiful equation.

But we keep forgetting the most important variable. We forget the human algorithm.

When the Human Algorithm Glitches

When the User Deviates

I call it the human algorithm—in simpler terms, it’s that messy, beautiful, unpredictable, and often broken source code that powers every single one of us. It’s our capacity for brilliance and our capacity for darkness, our resilience and our fragility. It’s the one thing that cannot be perfectly modeled, predicted, or controlled. And it is the one thing our modern systems of fame and public scrutiny are least equipped to handle.

The public record shows the glitch. Film credits in “Step Up 3D” and “Footloose”: the system humming along as expected. Then, a domestic violence conviction in 2017. A year in jail. The clean, elegant narrative of the rising star suddenly hit a runtime error. It was a deeply troubling and ugly deviation from the script.

And this is where we have to pause. This is our moment of ethical consideration. It is not our place to excuse or ignore the harm done. But as architects of systems—social, digital, or otherwise—it is absolutely our responsibility to ask what happens when the human at the center of our creation reveals their full, complicated, and flawed humanity. Our celebrity machine is designed to process idols, not complex people. It has no protocol for this. It simply flags the user for deletion. What responsibility do we have for the fallout when the person we elevated so high comes crashing down?

We are building artificial intelligences designed to emulate human creativity, social networks designed to mediate human connection, and digital worlds to house human experiences. We spend trillions of dollars and countless hours perfecting the code for the systems, but almost no time understanding the chaotic, fragile, and magnificent code of the humans who will use them. It’s like designing a warp drive without understanding the laws of physics. We’re building the vessel without respecting the passenger.

The details of Joshua Allen’s death remain, as the reports say, unrevealed. It’s the final black box. The ultimate, unreadable line of code. The silence from the public isn't malice; it's the sound of a system that doesn't know what to do with a story that doesn't fit its template. It’s a story of triumph, tragedy, and wrongdoing, all at once. It’s a human story. And our machines have no output for that.

This isn’t so different from the dawn of the Industrial Revolution. We built magnificent engines of steel and steam, capable of unprecedented productivity. We celebrated the machines, the efficiency, the output. And it took us decades to realize we had forgotten to account for the human cost—the pollution, the unsafe conditions, the lives broken by the very systems designed to improve them. We are making the same mistake again, only this time the factories are digital and the product is people.

So what do we do? Do we stop building? Do we abandon the pursuit of progress? Of course not. But we must build differently. We must start designing our systems not for the idealized, perfect user, but for the real one. For the human algorithm, in all its messy glory.

The Ghost in Our Machine

We are building a future of incredible, intelligent systems. But this story is a stark reminder of a fundamental truth we cannot afford to forget. The most complex, most powerful, and most important processor in any system we create will always be the human being at its center. And if we design for the machine but forget the ghost, all our work will be for nothing.

Reference article source:

-

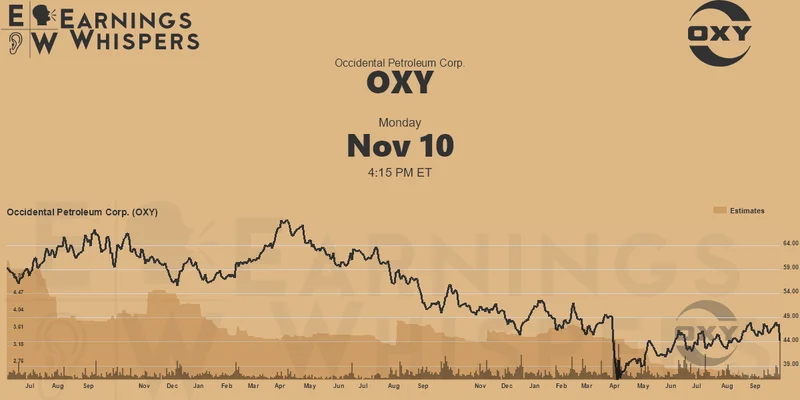

Warren Buffett's OXY Stock Play: The Latest Drama, Buffett's Angle, and Why You Shouldn't Believe the Hype

Solet'sgetthisstraight.Occide...

-

The Great Up-Leveling: What's Happening Now and How We Step Up

Haveyoueverfeltlikeyou'redri...

-

The Future of Auto Parts: How to Find Any Part Instantly and What Comes Next

Walkintoany`autoparts`store—a...

-

Applied Digital (APLD) Stock: Analyzing the Surge, Analyst Targets, and Its Real Valuation

AppliedDigital'sParabolicRise:...

-

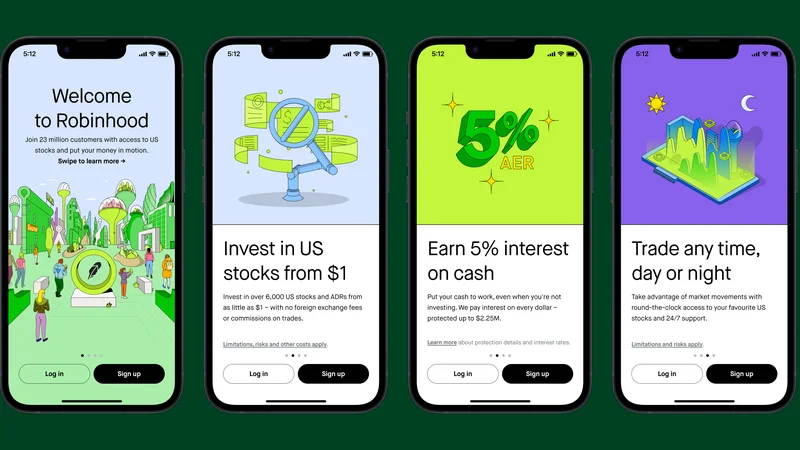

Analyzing Robinhood: What the New Gold Card Means for its 2025 Stock Price

Robinhood's$123BillionBet:IsT...

- Search

- Recently Published

-

- DeFi Token Performance & Investor Trends Post-October Crash: what they won't tell you about investors and the bleak 2025 ahead

- Render: What it *really* is, the tech-bro hype, and that token's dubious 'value'

- APLD Stock: What's *Actually* Fueling This "Big Move"?

- Avici: The Real Meaning, Those Songs, and the 'Hell' We Ignore

- Uber Ride Demand: Cost Analysis vs. Thanksgiving Deals

- Stock Market Rollercoaster: AI Fears vs. Rate Hike Panic

- Bitcoin: The Price, The Spin, & My Take

- Asia: Its Regions, Countries, & Why Your Mental Map is Wrong

- Retirement Age: A Paradigm Shift for Your Future

- Starknet: What it is, its tokenomics, and current valuation

- Tag list

-

- Blockchain (11)

- Decentralization (5)

- Smart Contracts (4)

- Cryptocurrency (26)

- DeFi (5)

- Bitcoin (31)

- Trump (5)

- Ethereum (8)

- Pudgy Penguins (6)

- NFT (5)

- Solana (5)

- cryptocurrency (6)

- bitcoin (7)

- Plasma (5)

- Zcash (12)

- Aster (10)

- nbis stock (5)

- iren stock (5)

- crypto (7)

- ZKsync (5)

- irs stimulus checks 2025 (6)

- pi (6)

- hims stock (4)

- kimberly clark (5)

- uae (5)