The Sam Altman Narrative: Deconstructing the Vision, the Quotes, and the Controversy

The Trillion-Dollar Arbitrage of Sam Altman

Let's set aside the philosophy for a moment and look at the capital flows. In the world of high finance, the most lucrative opportunities often lie in the gap between perception and reality. You find an asset the market misunderstands and you place a leveraged bet on the eventual correction. My analysis suggests Sam Altman is running a similar play, but on a civilizational scale. The asset isn’t a company; it’s the future of information. And the arbitrage is between the story he tells and the money he spends.

The numbers are, frankly, staggering. In just the past few weeks, OpenAI has orchestrated a series of blockbuster computing deals, primarily with chip giants Nvidia and AMD, bringing its total dealmaking this year to a reported figure of approximately $1 trillion. This isn't venture capital; this is nation-state-level industrial planning. The company is on track to spend an estimated $155 billion through 2029, a burn rate that makes the dot-com era look like a rounding error.

This isn’t the behavior of a company exploring a new technology. This is the behavior of a company executing a blitzkrieg for platform dominance. The goal is to secure the supply chain—the raw computational power—so completely that rivals are choked out before they can even scale. It's a classic monopolistic play, one that prioritizes market capture over immediate profitability or, it seems, ethical deliberation.

Indeed, one report's headline has tech CEOs marveling at, and worrying about, "Sam Altman's dizzying race to dominate AI." Aaron Levie of Box likens it to the dawn of the internet, a platform shift that comes once a generation. He’s right about the scale, but I think the analogy is flawed. The architects of the early internet weren’t simultaneously trying to raise a trillion dollars to control its core infrastructure from day one. What we're witnessing is an attempt to skip the messy, decentralized "early days" and jump straight to a consolidated, privately owned ecosystem. The sheer speed is a strategy in itself, designed to outrun regulation, public debate, and any meaningful competition. The question isn't whether it's bold. The question is whether the underlying asset can possibly justify the valuation.

The Narrative as a Hedging Instrument

This is where the second part of the arbitrage comes in: the narrative. When you’re deploying capital at this velocity, you create immense risk. You risk public backlash over copyright, societal panic over job displacement, and regulatory scrutiny over safety. A rational actor would mitigate these risks with clear policies and cautious rollouts. Instead, Altman appears to be using a carefully crafted, often contradictory, public narrative as his primary hedging instrument.

Consider his commentary on job losses. When pressed on the potential for AI to eliminate millions of knowledge-worker jobs, he doesn't offer a data-driven forecast or a policy proposal. He offers a folksy analogy about a farmer from 50 years ago who wouldn't recognize our modern jobs as "real work." This isn't an analysis; it's a dismissal. It’s a rhetorical tool designed to reframe a tangible economic threat as a philosophical inevitability, absolving the creator of any direct responsibility. It’s remarkably effective at shutting down conversation by implying that anyone worried about their livelihood simply lacks imagination.

Then there’s the issue of copyright, brought to a head by the release of Sora 2. The model’s ability to generate video is impressive, but it’s built on a foundation of what appears to be wholesale data ingestion without permission. When confronted, OpenAI’s motto seems to be "we’ll do what we want and you’ll let us." Yet, when another AI company, DeepSeek, was suspected of training on OpenAI’s models, the response was swift and severe: "We take aggressive, proactive countermeasures to protect our technology." This isn't hypocrisy; it's a clear statement of intent. In Altman’s world, there are two classes of intellectual property: OpenAI’s, which is sacrosanct, and everyone else’s, which is raw material.

And this is the part of the story that I find genuinely puzzling, and most indicative of the strategy. Both Altman and Meta’s Mark Zuckerberg have begun publicly warning about an AI "bubble," a move that led to headlines like "Goodbye to AI – Meta CEO Mark Zuckerberg joins Sam Altman and acknowledges that artificial intelligence could be on a bubble." On the surface, this looks like responsible leadership. But I've analyzed countless CEO transcripts during periods of market euphoria, and this specific tactic (publicly acknowledging the bubble while privately accelerating spend) is a classic and often effective one. It projects an image of sober wisdom, positions them as the eventual survivors when the "correction" inevitably wipes out smaller, less-capitalized competitors, and frames their continued massive investment as a sign of true, long-term belief. It’s a way to cheerlead the mania and simultaneously position yourself to profit from its collapse.

A Calculated Asymmetry

My final analysis is this: Sam Altman is not a philosopher king or a reckless villain. He is executing one of the most audacious trades in history, built on a fundamental asymmetry. The capital he is deploying is concrete, measured in commitments approaching a trillion dollars and in exaflops of compute. The risks, however (copyright infringement, mass unemployment, societal destabilization), are being deliberately kept abstract, pushed into the realm of philosophical debate and long-term inevitability. He is spending real money to build a hard-edged monopoly while selling a soft-focus story about the future of humanity. The ultimate goal is to create a platform so essential, so deeply embedded in the global economy, that its negative externalities become problems for society to solve, while its profits remain entirely private. The bubble isn't a risk to his plan; it's a feature.

-

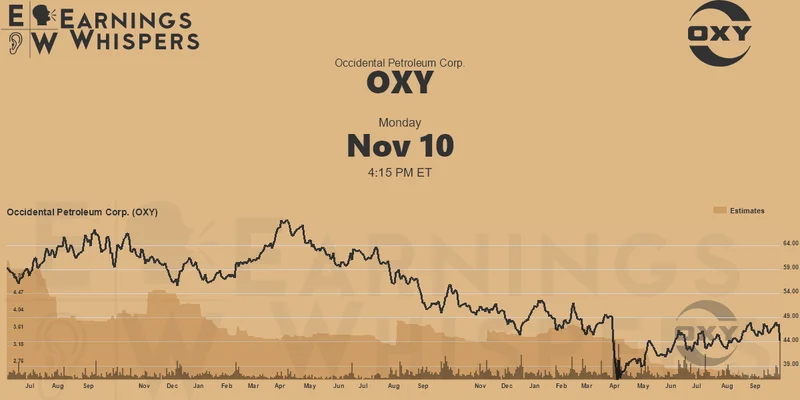

Warren Buffett's OXY Stock Play: The Latest Drama, Buffett's Angle, and Why You Shouldn't Believe the Hype

Solet'sgetthisstraight.Occide...

-

The Great Up-Leveling: What's Happening Now and How We Step Up

Haveyoueverfeltlikeyou'redri...

-

The Future of Auto Parts: How to Find Any Part Instantly and What Comes Next

Walkintoany`autoparts`store—a...

-

Applied Digital (APLD) Stock: Analyzing the Surge, Analyst Targets, and Its Real Valuation

AppliedDigital'sParabolicRise:...

-

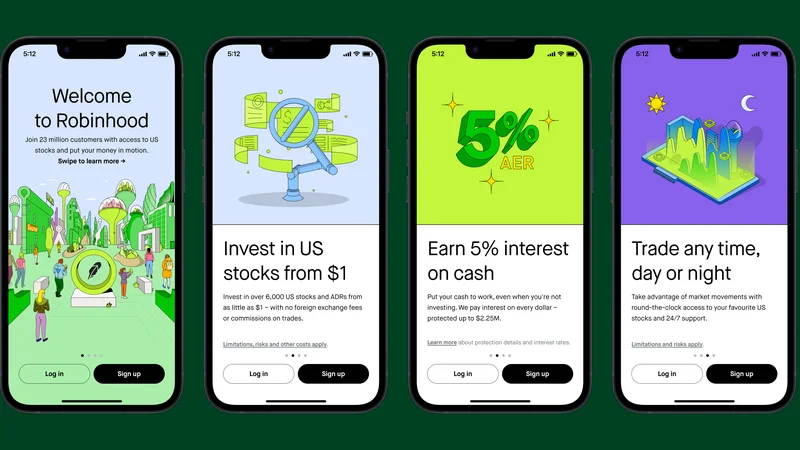

Analyzing Robinhood: What the New Gold Card Means for its 2025 Stock Price

Robinhood's$123BillionBet:IsT...

- Search

- Recently Published

-

- DeFi Token Performance & Investor Trends Post-October Crash: what they won't tell you about investors and the bleak 2025 ahead

- Render: What it *really* is, the tech-bro hype, and that token's dubious 'value'

- APLD Stock: What's *Actually* Fueling This "Big Move"?

- Avici: The Real Meaning, Those Songs, and the 'Hell' We Ignore

- Uber Ride Demand: Cost Analysis vs. Thanksgiving Deals

- Stock Market Rollercoaster: AI Fears vs. Rate Hike Panic

- Bitcoin: The Price, The Spin, & My Take

- Asia: Its Regions, Countries, & Why Your Mental Map is Wrong

- Retirement Age: A Paradigm Shift for Your Future

- Starknet: What it is, its tokenomics, and current valuation

- Tag list

-

- Blockchain (11)

- Decentralization (5)

- Smart Contracts (4)

- Cryptocurrency (26)

- DeFi (5)

- Bitcoin (31)

- Trump (5)

- Ethereum (8)

- Pudgy Penguins (6)

- NFT (5)

- Solana (5)

- cryptocurrency (6)

- bitcoin (7)

- Plasma (5)

- Zcash (12)

- Aster (10)

- nbis stock (5)

- iren stock (5)

- crypto (7)

- ZKsync (5)

- irs stimulus checks 2025 (6)

- pi (6)

- hims stock (4)

- kimberly clark (5)

- uae (5)