Morgan Stanley: What It Is, How It Works, and Your Account Login

Are You a Robot? Wall Street Is Asking, and Your Browser Is Answering.

You’ve seen the message a thousand times. It’s the digital equivalent of a chain-link fence, a sterile and unavoidable checkpoint on the way to your own information. "Please make sure your browser supports JavaScript and cookies and that you are not blocking them from loading." It’s so common, so utterly banal, that we click past it without a second thought.

That is a mistake.

This message isn’t just a technical prerequisite. It’s the quiet admission of a new form of interrogation. In the world of high finance, particularly at institutions like Morgan Stanley or its competitors Schwab and Fidelity, that simple browser check is the tip of a massive data-collection iceberg. The system isn't just asking if your browser works; it's asking who you are, where you've been, and what you're worth. And it’s making a judgment before you ever type in a password.

The Anatomy of a Digital Handshake

When you attempt to access your Morgan Stanley online portal, a complex and instantaneous transaction occurs that has nothing to do with your money. Your browser reaches out to their server, and the server asks for its papers. This isn't a simple "hello." It's a deep scan.

The server is cataloging your digital fingerprint. This includes your IP address, your operating system, screen resolution, browser version, language settings, and even the list of fonts installed on your machine (a surprisingly distinctive identifier). It’s checking for the tell-tale signs of a VPN or the Tor Browser. It’s analyzing the way you move your mouse on the page: the subtle, subconscious patterns of acceleration and hesitation that separate a human hand from a bot’s script. This entire dossier is compiled and cross-referenced in milliseconds.
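To make the idea concrete, here is a minimal sketch in Python of how a handful of browser attributes could be collapsed into a single identifier. It is an illustration under stated assumptions, not a description of what Morgan Stanley or any vendor actually runs: the function name fingerprint_id, the attribute names, and the choice of a truncated SHA-256 hash are all hypothetical.

```python
import hashlib
import json


def fingerprint_id(attributes: dict) -> str:
    """Hash a set of browser attributes into a short, stable identifier.

    Hypothetical scheme for illustration only; real fingerprinting
    products use many more signals and fuzzier matching.
    """
    # Sort any list values (e.g. fonts) so ordering does not change the result.
    normalized = {
        key: sorted(value) if isinstance(value, list) else value
        for key, value in attributes.items()
    }
    # Serialize with sorted keys so identical attributes always hash identically.
    canonical = json.dumps(normalized, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


# Assumed example values; none of these come from a real client.
visitor = {
    "os": "macOS 14",
    "screen": "2560x1440",
    "browser": "Firefox 128",
    "language": "en-US",
    "fonts": ["Helvetica", "Arial", "Futura"],
}

# Same machine, fonts reported in a different order: same fingerprint.
same_visitor = dict(visitor, fonts=["Futura", "Helvetica", "Arial"])

# One extra font installed: a different fingerprint.
one_more_font = dict(visitor, fonts=visitor["fonts"] + ["Comic Sans MS"])

print(fingerprint_id(visitor) == fingerprint_id(same_visitor))
print(fingerprint_id(visitor) == fingerprint_id(one_more_font))
```

The IP address is deliberately left out of this sketch, because it changes whenever you switch networks. The point of the exercise is that the remaining attributes, taken together, can still single out one machine among many.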

The stated purpose, of course, is security. For a firm managing trillions in assets, preventing automated attacks and account takeovers is a non-negotiable priority. They need to stop a botnet in Russia from testing a million stolen passwords against the Morgan Stanley client login page. And on that front, these systems are remarkably effective. They are the silent sentinels protecting the digital vaults.

But the data collected for security doesn't just get thrown away after the check is complete. That would be inefficient. And if there's one thing these institutions are not, it's inefficient. This security data becomes the foundation for a much more interesting and opaque process: client stratification.

I've looked at hundreds of these data-flow diagrams in my career, and this is the part of the process that I find genuinely troubling from a transparency standpoint. The system is analyzing dozens, more likely hundreds, of data points to build a profile that informs not just security but also the user's entire experience.

The Score You Don't Know You Have

Think of this process as a bouncer at an exclusive club. The bouncer’s first job is to check your ID to make sure you’re of age and not on a banned list. That’s the security function. But he’s also looking at your shoes, your watch, how you carry yourself. He's making a split-second judgment about whether you belong in the general admission area or the VIP lounge with bottle service.

The data from your browser check feeds into an invisible trust score. A longtime Morgan Stanley wealth management client logging in from their home Wi-Fi on a known device with a consistent behavioral pattern? They're a VIP. The system rolls out the red carpet—a frictionless login, maybe even a customized dashboard. Their digital handshake is firm and familiar.

Now, consider a different user. Someone trying to access their E*TRADE from Morgan Stanley account from a hotel Wi-Fi network in a different country, using a brand-new laptop. The system sees a collection of red flags. The IP address is new, the device is unknown, and the browser arrives with no cookies or stored data from earlier visits (often a sign of a sterile virtual machine used for hacking). This user doesn't get the red carpet. They get the chain-link fence: multi-factor authentication, security questions, maybe even a temporary account lock. The system is effectively saying, "I don't know you, and I don't trust you."
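The two logins above can be sketched as a toy scoring function. Everything here is an assumption made for illustration: the signal names, the weights, the thresholds, and the friction tiers are invented, because the real ones are exactly what these firms do not publish.

```python
from dataclasses import dataclass


@dataclass
class LoginContext:
    """Signals observed at login (hypothetical, simplified)."""
    known_device: bool
    known_ip: bool
    home_country: bool
    has_prior_cookies: bool


# Invented weights that sum to 100; a real model would be far more complex.
WEIGHTS = {
    "known_device": 35,
    "known_ip": 25,
    "home_country": 25,
    "has_prior_cookies": 15,
}


def trust_score(ctx: LoginContext) -> int:
    """Add up the weight of every familiar signal (0 = stranger, 100 = regular)."""
    return sum(weight for name, weight in WEIGHTS.items() if getattr(ctx, name))


def required_friction(score: int) -> str:
    """Map a trust score to how much extra verification the user faces."""
    if score >= 70:
        return "password_only"
    if score >= 30:
        return "multi_factor"
    return "multi_factor_and_possible_lock"


# The longtime client at home on a known device.
home_client = LoginContext(True, True, True, True)

# The traveler on hotel Wi-Fi abroad with a brand-new laptop.
traveler = LoginContext(False, False, False, False)

for label, ctx in [("home client", home_client), ("traveler", traveler)]:
    score = trust_score(ctx)
    print(label, score, required_friction(score))
```

Even in this toy version, the user never sees the weights or the thresholds. Shift a single number in WEIGHTS and a different set of people meets the chain-link fence.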

This makes perfect sense from a risk-management perspective. The problem is the opacity. What factors are weighted most heavily? How does this score evolve over time? Is a user penalized for using privacy-enhancing tools? We have no idea. There is no FICO score for digital trust. You are being judged by a proprietary algorithm whose rules are a closely guarded trade secret.

And what happens when that algorithm gets it wrong? A legitimate user, perhaps a less tech-savvy one, gets locked out, while a sophisticated fraudster who knows how to mimic a "trusted" digital signature (a process known as spoofing, which is a constant cat-and-mouse game) gets in. The system’s reliance on these passive data points creates a new, invisible barrier to access that is entirely outside the user’s control. Are you more likely to get a call about new investment products if your digital profile flags you as a high-net-worth individual? Does your customer service experience change based on this score? These are the questions that these firms are, for obvious reasons, not eager to answer.

Your Digital Exhaust Is Their Alpha

Ultimately, the sterile prompt about cookies and JavaScript is a polite fiction. The real question being asked is, "Based on your data exhaust, what is your probable value and risk to our firm?" Your digital identity is no longer separate from your financial one; it is a leading indicator. The passive data you generate simply by existing online has become a crucial input for the risk and marketing models at the heart of modern finance. The line between protecting a client and profiling them has been erased. In this new paradigm, you are your data, and Wall Street is always watching.

-

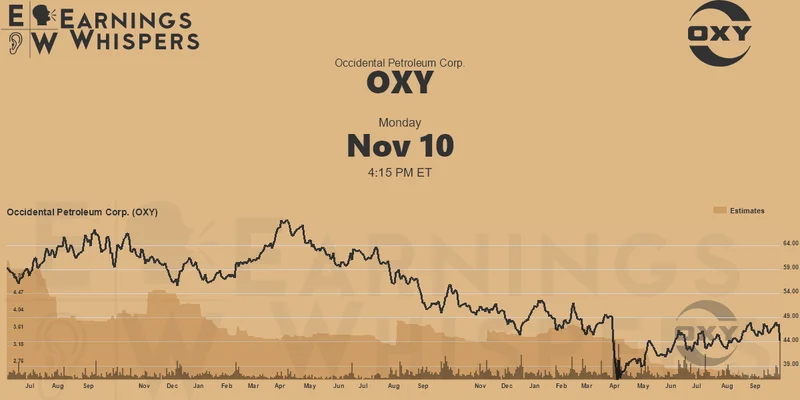

Warren Buffett's OXY Stock Play: The Latest Drama, Buffett's Angle, and Why You Shouldn't Believe the Hype

Solet'sgetthisstraight.Occide...

-

The Great Up-Leveling: What's Happening Now and How We Step Up

Haveyoueverfeltlikeyou'redri...

-

The Business of Plasma Donation: How the Process Works and Who the Key Players Are

Theterm"plasma"suffersfromas...

-

Zcash's Zombie Rally: The Price Prediction vs. What Reddit Is Saying

So,Zcashismovingagain.Mytime...

-

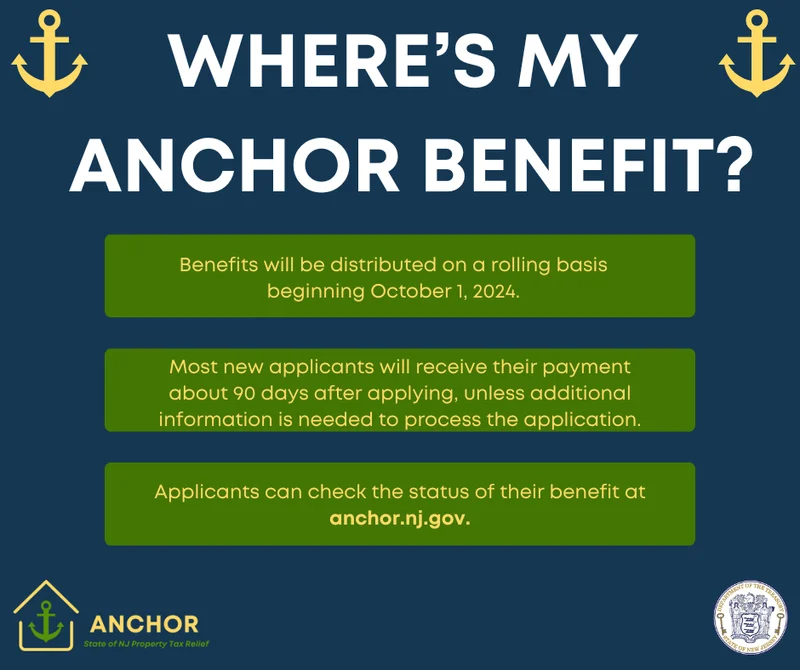

NJ's ANCHOR Program: A Blueprint for Tax Relief, Your 2024 Payment, and What Comes Next

NewJersey'sANCHORProgramIsn't...

- Search

- Recently Published

-

- Scott Bessent's 'Price Floor' Plan for China: What We Know and Why It's Pure Insanity

- Salesforce (CRM) Stock Surges on $60B Revenue Target: What the Forecast Means and If the Numbers Add Up

- The SMR Stock Gold Rush: What's Behind the Army Deal Hype and Is It All Just Hot Air?

- United Airlines Stock Drops on Mixed Q3 Results: Analyzing the Earnings Beat and Revenue Shortfall

- COOT Stock's Breakthrough Surge: Why It's Happening and What It Means for Our Future

- Tech Giants' $40B Aligned Data Centers Acquisition: Why This Is a Turning Point for AI's Future

- Turkey's "Steel Dome" Defense System: What It Is and Why It's a Game-Changer

- Mantra: A Quantitative Look at the Psychology and Actual Impact

- Nasdaq Index: Performance, Key Drivers, and Future Outlook

- Robert Herjavec's Million-Dollar Investment Strategy: The Surprising Answer and the Future-Proof Logic Behind It

- Tag list

-

- carbon trading (2)

- Blockchain (11)

- Decentralization (5)

- Smart Contracts (4)

- Cryptocurrency (26)

- DeFi (5)

- Bitcoin (29)

- Trump (5)

- Ethereum (8)

- Pudgy Penguins (5)

- NFT (5)

- Solana (5)

- cryptocurrency (6)

- XRP (3)

- Airdrop (3)

- MicroStrategy (3)

- Stablecoin (3)

- Digital Assets (3)

- PENGU (3)

- Plasma (5)

- Zcash (5)

- Aster (4)

- investment advisor (4)

- crypto exchange binance (3)

- SX Network (3)